Principles of the quantization scheme

As mentioned earlier, quantization converts a model based on floating-point data types into one that operates on fixed-point numbers. The core questions are how to represent the floating-point numbers in the model with fixed-point numbers, and how to represent the corresponding floating-point operations with fixed-point operations. Taking float32 to uint8 as an example, one of the simplest conversions is to discard the fractional part of the float32 value, keep only the integer part, and use 0 or 255 for values outside the range [0, 255]. This scheme is clearly inappropriate. In particular, after batch normalization (BN) the outputs of the intermediate layers of a deep neural network have roughly zero mean and unit variance, so discarding the fractional part causes a large loss of accuracy. And because values outside [0, 255] are clipped to 0 or 255, any floating-point number that is negative or greater than 255 incurs a huge error.

This scheme is close to the type conversion logic of common programming languages, so we can call it the type conversion scheme. From the analysis above, the type conversion scheme loses a great deal of accuracy for data whose magnitude is either too large or too small.
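To make this loss concrete, here is a minimal sketch of the type conversion scheme in Python with NumPy (the choice of NumPy and the helper name `type_convert_uint8` are assumptions of this example, not part of the original text). The input values are picked to resemble normalized activations plus one large outlier.

```python
import numpy as np

def type_convert_uint8(x):
    """Type conversion scheme: drop the fractional part, clip to [0, 255]."""
    truncated = np.trunc(x)  # discard the fractional part
    return np.clip(truncated, 0, 255).astype(np.uint8)

x = np.array([-1.3, -0.4, 0.2, 0.9, 1.7, 300.5], dtype=np.float32)
q = type_convert_uint8(x)
print(q.tolist())  # [0, 0, 0, 0, 1, 255]
```

Four of the five values near zero collapse to 0, and the outlier is clipped to 255, which is the behavior described above.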

At present, mainstream floating-point to fixed-point schemes mostly use uniform quantization, because it is friendlier to inference. A floating-point number is mapped uniformly onto the representable range of a fixed-point number according to its value range.

Uniform quantization scheme

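Suppose a floating-point number $x$ has the value range $[x_{min}, x_{max}]$. The formula for converting it to an 8-bit fixed-point number with the representable range [0, 255] is as follows: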

$$x_{int} = round(x/s) + z$$ $$x_{Q} = clamp(0,255,x_{int})$$

Here $s$ is the scale, also called the step size, and is a floating-point number. $z$ is the zero point, that is, the fixed-point number that represents the floating-point value 0. $$s = (x_{max} - x_{min}) / 255$$ $$z = round((0 - x_{min}) / s)$$

From the above, the uniform quantization scheme represents any value range reasonably well. Unlike the type conversion scheme, it neither loses accuracy for small value ranges nor fails to represent large ones. The cost is two additional variables, the zero point $z$ and the scale $s$. In addition, the uniform quantization scheme introduces $round$ and $clamp$ operations, which add error and therefore affect the accuracy of the model. How to reduce the precision loss of converting data from floating point to fixed point is the focus of quantization research as a whole.

The zero point deserves particular attention, because operations such as padding and ReLU in a network are sensitive to 0, and 0 must be quantized exactly for the converted fixed-point operations to be correct. When the value range of the floating-point numbers does not contain 0, we need to widen the range so that it does.
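The widening step can be sketched as follows. This is an illustrative example only: the helper names `range_including_zero` and `affine_params` are hypothetical, and the uint8 range [0, 255] is taken from the formulas above.

```python
def range_including_zero(x_min, x_max):
    """Widen [x_min, x_max] so that it contains 0.0."""
    return min(x_min, 0.0), max(x_max, 0.0)

def affine_params(x_min, x_max, qmin=0, qmax=255):
    """Scale s and integer zero point z computed on the widened range."""
    x_min, x_max = range_including_zero(x_min, x_max)
    s = (x_max - x_min) / (qmax - qmin)
    z = int(round(qmin - x_min / s))
    return s, z

# A range that does not contain zero, e.g. activations in [2.0, 6.0].
s, z = affine_params(2.0, 6.0)

# Quantize the floating-point value 0.0 and map it back.
q_zero = min(max(round(0.0 / s) + z, 0), 255)
zero_roundtrip = (q_zero - z) * s
print(z, q_zero, zero_roundtrip)  # 0 0 0.0
```

Because 0.0 lands exactly on the integer `z`, zero padding and the ReLU threshold are represented without error after the range is widened.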

The inverse quantization formula corresponding to the uniform quantization scheme is as follows: $$x_{float} = (x_{Q} -z) * s$$

As a result, a floating-point number that has been quantized and then dequantized differs from the original by some error. The difference can be seen in the figure below. Quantization discretizes the parameters of the network model. How much this affects the final accuracy of the model depends on how far the parameter distribution of the model is from a uniform distribution. [Figure to be inserted.]
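As a rough illustration of this round-trip error, the sketch below applies the quantization and inverse quantization formulas above to normally distributed data. It assumes NumPy, and the function names `quantize` and `dequantize` are chosen for this example only.

```python
import numpy as np

def quantize(x, s, z, qmin=0, qmax=255):
    """x_Q = clamp(qmin, qmax, round(x / s) + z)"""
    x_int = np.round(x / s) + z
    return np.clip(x_int, qmin, qmax).astype(np.uint8)

def dequantize(x_q, s, z):
    """x_float = (x_Q - z) * s"""
    return (x_q.astype(np.float32) - z) * s

rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=1000).astype(np.float32)

# Scale and zero point from the observed range of the data.
x_min, x_max = float(x.min()), float(x.max())
s = (x_max - x_min) / 255.0
z = int(round(-x_min / s))

x_hat = dequantize(quantize(x, s, z), s, z)
max_err = float(np.abs(x - x_hat).max())
print(max_err, s)  # the error stays within about half a step
```

Inside the covered range, the error of each value comes from rounding to the nearest grid point, so it is bounded by roughly $s/2$.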

Next, let's look at how a quantized fixed-point convolution can represent the original floating-point convolution.
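The expression that this passage refers to does not appear in the text. As a hedged reconstruction from the inverse quantization formula $x_{float} = (x_{Q} - z) * s$, assuming a single output position, a fixed output channel $m$, and per-tensor parameters (the zero-point symbols $z_x$ and $z_w$ are names introduced for this sketch), the expansion would read:

$$
\begin{aligned}
conv(x, w)_{m} &= \sum_{k,l,n} x_{k,l,n} \, w_{k,l,m,n} \\
&= \sum_{k,l,n} s_{x} (x_{Q,k,l,n} - z_{x}) \, s_{w} (w_{Q,k,l,m,n} - z_{w}) \\
&= s_{x} s_{w} \Big( \sum_{k,l,n} x_{Q,k,l,n} w_{Q,k,l,m,n} - z_{w} \sum_{k,l,n} x_{Q,k,l,n} - z_{x} \sum_{k,l,n} w_{Q,k,l,m,n} + N z_{x} z_{w} \Big)
\end{aligned}
$$

Here $N$ denotes the number of terms in the sum. The first term inside the parentheses is the fixed-point convolution itself, and the remaining three terms exist only because of the zero points.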

Here $k, l, m, n$ are the traversal indices over $kernel\_size$, $output\_channel$ and $input\_channel$, respectively. When the zero_point of both the convolution input and the parameters is 0, the floating-point convolution simplifies to

$$ conv(x, w) = s_{x}s_{w} (conv(x_{Q}, w_{Q})) $$

That is, the result of the fixed-point convolution differs from the actual output only by a scale factor, which greatly simplifies the fixed-point logic. For this reason we use symmetric uniform quantization in most cases.
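The identity can be checked numerically with a small sketch. It assumes NumPy, a single-channel 1-D convolution for brevity, and scales taken from the largest absolute value of each tensor; the helper names are hypothetical.

```python
import numpy as np

def symmetric_quantize(x, s, qmin=-127, qmax=127):
    """Zero point is 0, so quantization is just round and clamp."""
    return np.clip(np.round(x / s), qmin, qmax).astype(np.int8)

def conv1d_valid(x, w):
    """Plain 1-D 'valid' convolution (cross-correlation), single channel."""
    n = len(x) - len(w) + 1
    return np.array([np.dot(x[i:i + len(w)], w) for i in range(n)])

rng = np.random.default_rng(0)
x = rng.normal(size=32)
w = rng.normal(size=5)

s_x = np.abs(x).max() / 127
s_w = np.abs(w).max() / 127
x_q = symmetric_quantize(x, s_x)
w_q = symmetric_quantize(w, s_w)

# Integer convolution accumulated in int32, followed by a single rescale.
acc = conv1d_valid(x_q.astype(np.int32), w_q.astype(np.int32))
y_fixed = s_x * s_w * acc

# Same computation on the dequantized operands, and the float reference.
y_deq = conv1d_valid(x_q * s_x, w_q * s_w)
y_float = conv1d_valid(x, w)
print(np.allclose(y_fixed, y_deq), float(np.abs(y_float - y_fixed).max()))
```

`y_fixed` and `y_deq` agree up to floating-point rounding, which is the statement of the formula. The remaining gap to `y_float` is the quantization error of the inputs and weights.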

When the $zero\_point$ is fixed at the integer 0, the scheme is called symmetric uniform quantization. Taking int8 as the fixed-point type (int8 is chosen only because it looks more symmetric; uint8 is also possible), the quantization formula is as follows
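The formula itself is missing at this point. Setting $z = 0$ in the uniform quantization formulas above and using the int8 range gives the following form. Deriving $s$ from the largest absolute value of the range is a common convention and is an assumption here, not something the text states:

$$s = max(|x_{min}|, |x_{max}|) / 127$$ $$x_{int} = round(x/s)$$ $$x_{Q} = clamp(-128,127,x_{int})$$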

To allow a faster SIMD implementation, we restrict the fixed-point range of the convolution weights to [-127, 127]. The corresponding inverse quantization operation is

$$ x_{float} = x_{Q}*s $$

As can be seen, quantization and dequantization are simpler under symmetric uniform quantization. There are also other quantization methods, such as stochastic uniform quantization. Because we use symmetric uniform quantization in most cases, we do not describe them here.

Note

When MegEngine implements quantization with SIMD instructions, some kernels use a 16-bit accumulator to store the value of a*b+c*d (that is, the result of one multiply-accumulate step), where a, b, c and d are all qint8. This value can overflow if and only if a, b, c and d are all -128. As long as that case is avoided, there is no overflow problem. Since two of the four values a, b, c and d must be weights, our conventional approach is to define the quantization range of weights as [-127, 127].
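The overflow argument in this note can be verified by enumeration. The sketch below assumes NumPy and that each product pairs one activation with one weight; `pair_sum_range` is a hypothetical helper, not a MegEngine function.

```python
import numpy as np

INT16_MIN, INT16_MAX = -32768, 32767

def pair_sum_range(weight_min):
    """Range of a*b + c*d, with activations a, c in [-128, 127]
    and weights b, d in [weight_min, 127]."""
    acts = np.arange(-128, 128, dtype=np.int32)
    weights = np.arange(weight_min, 128, dtype=np.int32)
    products = np.outer(acts, weights)  # every possible single product
    return 2 * int(products.min()), 2 * int(products.max())

full = pair_sum_range(-128)        # weights may take the value -128
restricted = pair_sum_range(-127)  # weights limited to [-127, 127]
print(full, restricted)  # (-32512, 32768) (-32512, 32512)
```

With the full int8 range the maximum 32768 exceeds `INT16_MAX` by one, and it is reached only when all four operands are -128. With weights limited to [-127, 127] the sum always fits in 16 bits.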

Value range statistics

The key quantities in the description of uniform quantization above are $scale$ and $zero\_point$, and both are determined by the value range of the floating-point numbers. How do we determine the value range of each piece of data in the network that needs to be quantized? Generally, there are two approaches:

One is to manually set the range of values based on experience. We can do this when there is a lack of data or for some intermediate features.

The other is to run a small batch of data through the network and set the range from the resulting statistics. The statistic to use can be chosen according to the characteristics of the data.
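The statistics-based approach might look like the following sketch. It assumes NumPy, and both `RunningMinMax` and `percentile_range` are hypothetical helpers written for this example; they are not MegEngine APIs. The random arrays stand in for activations collected during a calibration run.

```python
import numpy as np

class RunningMinMax:
    """Tracks the smallest and largest value seen over calibration batches."""

    def __init__(self):
        self.min_val = float("inf")
        self.max_val = float("-inf")

    def update(self, batch):
        self.min_val = min(self.min_val, float(np.min(batch)))
        self.max_val = max(self.max_val, float(np.max(batch)))

    def scale_zero_point(self, qmin=0, qmax=255):
        lo = min(self.min_val, 0.0)  # keep 0.0 representable
        hi = max(self.max_val, 0.0)
        s = (hi - lo) / (qmax - qmin)
        z = int(round(qmin - lo / s))
        return s, z

def percentile_range(samples, pct=99.9):
    """Alternative statistic that ignores rare outliers."""
    lo = np.percentile(samples, 100.0 - pct)
    hi = np.percentile(samples, pct)
    return float(lo), float(hi)

rng = np.random.default_rng(0)
obs = RunningMinMax()
for _ in range(8):  # a few small calibration batches
    obs.update(rng.normal(size=(16, 64)))
s, z = obs.scale_zero_point()
p_lo, p_hi = percentile_range(rng.normal(size=10000))
```

Plain min/max covers every observed value but is sensitive to outliers. A percentile-based range trades a little clipping for a smaller step size, which can suit long-tailed data better.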

Quantization-aware training

In the section on uniform quantization, we mentioned that the error before and after quantization depends mainly on how far the distributions of the model parameters and activation values are from a uniform distribution. For a quantization-friendly model, we only need to obtain its value ranges through range statistics and then apply the corresponding quantization scheme to convert it to fixed point. For models that are not quantization-friendly, however, direct quantization introduces errors large enough that the accuracy of the final model is too low to be usable. Is there a way to make the model friendlier to quantization during training?

The answer is yes. During training, we can quantize and then dequantize the parameters that are to be quantized, which introduces the accuracy loss caused by quantization into the training process. Through training, the network gradually adapts to this disturbance, so that its performance after real quantization is consistent with its performance during training. This procedure is called quantization-aware training (QAT).

Note that because the quantization operation is not differentiable, an approximation is made during actual training: the gradient from the previous layer skips over the quantization and dequantization operations and is passed directly to the current parameters.
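This approximation is commonly called the straight-through estimator. A minimal sketch on a toy one-parameter problem, assuming NumPy and symmetric quantization with a fixed scale, could look like this (the function names and the toy setup are illustrative, not taken from MegEngine):

```python
import numpy as np

def fake_quant(w, s, qmin=-127, qmax=127):
    """Quantize then immediately dequantize (symmetric, zero point 0)."""
    return np.clip(np.round(w / s), qmin, qmax) * s

def fake_quant_backward(grad_out):
    """Straight-through estimator: treat fake_quant as the identity."""
    return grad_out

# Toy problem: fit y = w * x while the forward pass sees a quantized weight.
x = np.array([1.0, 1.0, 1.0, 1.0])
target_w, s, lr = 0.73, 0.1, 0.25
y = target_w * x

w = 0.0  # the float weight that the optimizer updates
for _ in range(50):
    w_fq = fake_quant(w, s)                        # forward uses the quantized weight
    grad_w_fq = np.mean(2.0 * (w_fq * x - y) * x)  # dLoss/dw_fq for the MSE loss
    w -= lr * fake_quant_backward(grad_w_fq)       # gradient reaches the float weight

w_final = float(fake_quant(w, s))
print(w_final)
```

The float weight keeps receiving gradients even though the rounding step has zero derivative almost everywhere, and the quantized weight ends up on a grid point within one step $s$ of the target.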

Quantized network inference process

The above describes the form that the convolution operation takes in the fixed-point case. You can derive the fixed-point form of the ReLU activation function yourself. As for BN, most networks absorb it into the preceding layer, so we can fold it into the convolution.
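Folding BN into a convolution uses the standard inference-time identity, sketched below with NumPy. The function name `fold_bn`, the weight layout `(out_channels, in_channels, kh, kw)` and the 1x1 test convolution are assumptions of this example.

```python
import numpy as np

def fold_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold y = gamma * (conv(x) - mean) / sqrt(var + eps) + beta into conv.

    w has shape (out_channels, in_channels, kh, kw).
    b and the BN vectors have shape (out_channels,).
    """
    factor = gamma / np.sqrt(var + eps)
    w_folded = w * factor[:, None, None, None]
    b_folded = (b - mean) * factor + beta
    return w_folded, b_folded

rng = np.random.default_rng(0)
out_c, in_c = 4, 3
w = rng.normal(size=(out_c, in_c, 1, 1))
b = rng.normal(size=out_c)
gamma, beta = rng.normal(size=out_c), rng.normal(size=out_c)
mean, var = rng.normal(size=out_c), rng.uniform(0.5, 2.0, size=out_c)
x = rng.normal(size=in_c)

def conv1x1(w_, b_):
    """A 1x1 convolution evaluated at a single pixel."""
    return w_[:, :, 0, 0] @ x + b_

y_ref = gamma * (conv1x1(w, b) - mean) / np.sqrt(var + 1e-5) + beta
w_f, b_f = fold_bn(w, b, gamma, beta, mean, var)
y_fold = conv1x1(w_f, b_f)
print(np.allclose(y_ref, y_fold))  # True
```

After folding, only the convolution weights and bias remain to be quantized, so BN needs no fixed-point form of its own.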

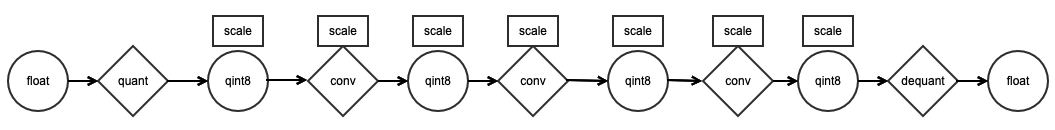

For an off-the-shelf network, we can add quantization and dequantization operations before and after each convolutional layer, which replaces floating-point operations with fixed-point operations. Going further, we can track the scale associated with each quantized variable throughout inference, so that the whole network can be traversed without intermediate dequantization. Apart from a very small overhead for the additional scale computation, all floating-point operations of the network are then converted into the corresponding fixed-point operations. The specific process is shown in the figure.
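The idea of carrying scales through the network can be sketched on a two-layer example. This is a simplified illustration with NumPy: matrix multiplications stand in for convolutions, the hidden range comes from a float calibration run, and the rescaling multiplier is kept as a float, whereas real kernels typically use an integer multiply and shift. All helper names are hypothetical.

```python
import numpy as np

def sym_scale(x, qmax=127):
    return float(np.abs(x).max()) / qmax

def sym_quantize(x, s, qmax=127):
    return np.clip(np.round(x / s), -qmax, qmax).astype(np.int8)

def requantize(acc, s_in, s_out, qmax=127):
    """Rescale an int32 accumulator with scale s_in to int8 with scale s_out."""
    return np.clip(np.round(acc * (s_in / s_out)), -qmax, qmax).astype(np.int8)

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16))
w1 = rng.normal(size=(16, 32)) * 0.1
w2 = rng.normal(size=(32, 4)) * 0.1

# Float reference, also used here as the calibration run for the hidden range.
h_ref = np.maximum(x @ w1, 0.0)
y_ref = h_ref @ w2

s_x, s_w1, s_w2, s_h = sym_scale(x), sym_scale(w1), sym_scale(w2), sym_scale(h_ref)
x_q, w1_q, w2_q = sym_quantize(x, s_x), sym_quantize(w1, s_w1), sym_quantize(w2, s_w2)

# Layer 1: integer matmul, then ReLU on integers (valid because the zero point is 0).
acc1 = x_q.astype(np.int32) @ w1_q.astype(np.int32)  # scale: s_x * s_w1
acc1 = np.maximum(acc1, 0)
h_q = requantize(acc1, s_x * s_w1, s_h)              # scale: s_h

# Layer 2 consumes int8 directly; only the tracked scale changes.
acc2 = h_q.astype(np.int32) @ w2_q.astype(np.int32)  # scale: s_h * s_w2

y_fixed = acc2 * (s_h * s_w2)  # single dequantization at the network output
max_err = float(np.abs(y_fixed - y_ref).max())
print(max_err)
```

Between the two layers the data stays in int8, and the only floating-point work is the per-layer multiplier `s_in / s_out`, which corresponds to the small extra scale computation mentioned above.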

Most of the operations involved in range statistics and quantization-aware training take place in the training phase. MegEngine provides ready-made wrappers for both, so we do not need to implement them manually.

So far we have given a rough introduction to the fixed-point conversion used in network quantization and to the computation scheme after conversion.

Reference: https://arxiv.org/pdf/1806.08342.pdf