Tensor data type#

See also

In computer science, a data type tells the compiler or interpreter how the programmer intends to use the data. See the Wikipedia article on Data type.

MegEngine uses numpy.dtype to represent its basic data types. For details, refer to the following:

- numpy.dtype has a dedicated implementation in NumPy; see NumPy's explanation of Data type objects.
- The official NumPy Data types document explains the array types and conversion rules.

According to MEP 3 – Tensor API Design Specification, MegEngine will refer to the data types defined in the “Array API Standard” <https://data-apis.org/array-api/latest/API_specification/data_types.html>.

The data type (dtype) mentioned above is a basic attribute of a Tensor. All elements in a single Tensor have exactly the same data type, so each element occupies the same amount of memory. The data type can be specified when a Tensor is created, or an existing Tensor can be converted to another type; in both cases dtype is passed as a parameter. float32 is the most frequently used Tensor data type in MegEngine.

>>> a = megengine.functional.ones(5)

>>> a.dtype

numpy.float32

Data type support#

MegEngine does not yet support every data type required by the “Array API Standard”. The current status is as follows:

| Data type | numpy.dtype | Equivalent string | Numerical interval | Supported |
|---|---|---|---|---|
| Boolean | numpy.bool_ | bool | True or False | ✔ |
| Signed 8-bit integer | numpy.int8 | int8 | \([-2^{7}, 2^{7}-1]\) | ✔ |
| Signed 16-bit integer | numpy.int16 | int16 | \([-2^{15}, 2^{15}-1]\) | ✔ |
| Signed 32-bit integer | numpy.int32 | int32 | \([-2^{31}, 2^{31}-1]\) | ✔ |
| Signed 64-bit integer | numpy.int64 | int64 | \([-2^{63}, 2^{63}-1]\) | ✖ |
| Unsigned 8-bit integer | numpy.uint8 | uint8 | \([0, 2^{8}-1]\) | ✔ |
| Unsigned 16-bit integer | numpy.uint16 | uint16 | \([0, 2^{16}-1]\) | ✔ |
| Unsigned 32-bit integer | numpy.uint32 | uint32 | \([0, 2^{32}-1]\) | ✖ |
| Unsigned 64-bit integer | numpy.uint64 | uint64 | \([0, 2^{64}-1]\) | ✖ |
| Half-precision floating point | numpy.float16 | float16 | IEEE 754 [1] | ✔ |
| Single-precision floating point | numpy.float32 | float32 | IEEE 754 [1] | ✔ |
| Double-precision floating point | numpy.float64 | float64 | IEEE 754 [1] | ✖ |

New in version 1.7: Added support for the uint16 type.
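The numerical intervals of the integer types follow directly from their bit widths. The snippet below is a small standard-library sketch, not MegEngine code; the helper name `integer_range` is made up for this illustration and it assumes two's-complement representation for the signed types.

```python
def integer_range(bits: int, signed: bool) -> tuple[int, int]:
    """Return the inclusive (min, max) range of an integer type with `bits` bits."""
    if signed:
        # Two's complement: one bit is used for the sign.
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return 0, 2 ** bits - 1


for bits in (8, 16, 32, 64):
    print(f"int{bits}:  {integer_range(bits, signed=True)}")
    print(f"uint{bits}: {integer_range(bits, signed=False)}")
```

For instance, `integer_range(8, signed=True)` gives `(-128, 127)`, and a signed 64-bit integer spans \([-2^{63}, 2^{63}-1]\).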

Warning

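Not all existing operators support computation between the MegEngine data types listed above (only float32 is guaranteed to work everywhere).

This can be inconvenient for experimental or test code. For example, matmul does not support inputs that are both int32.

If you want to multiply two int32 matrices, you have to convert them explicitly by hand:

from megengine import Tensor

import megengine.functional as F

a = Tensor([[1, 2, 3], [4, 5, 6]]) # shape: (2, 3), dtype: int32

b = Tensor([[1, 2], [3, 4], [5, 6]]) # shape: (3, 2), dtype: int32

c = F.matmul(a, b) # invalid: MatMul(Int32, Int32) is unsupported

c = F.matmul(a.astype("float32"), b.astype("float32")) # OK

Situations like this may raise the question: why don't existing operators support every data type? Ideally they would.

However, adapting and optimizing operators for every data type bloats the code size, so in general only the most common data types are supported.

Continuing with int32 matrix multiplication as the example: in practice, int32 is rarely used for matrix multiplication,

for reasons that include results overflowing easily. The most common type today is float32, which is also the type most widely supported by operators.

Note: the type conversion above causes a loss of precision, and users need to take its effect into account.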
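To make the precision loss of an int32 to float32 conversion concrete: float32 has a 24-bit significand, so integers with a magnitude above \(2^{24}\) are not all exactly representable. The following is a standard-library sketch that does not use MegEngine; the helper `to_float32` is a name chosen for this illustration and simply rounds a value through the IEEE 754 single-precision format.

```python
import struct


def to_float32(value: float) -> float:
    """Round `value` to the nearest float32 and return it as a Python float."""
    return struct.unpack("<f", struct.pack("<f", float(value)))[0]


exact = 2 ** 24        # 16777216 fits in a 24-bit significand
inexact = 2 ** 24 + 1  # 16777217 needs 25 significant bits

print(to_float32(exact) == exact)      # True: the value survives the conversion
print(to_float32(inexact) == inexact)  # False: the value is rounded
print(to_float32(inexact))             # 16777216.0
```

Values of this size are well within the int32 range, so converting large int32 matrices to float32 before a matmul can change the result.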

Note

Support for quantized data types is discussed separately.

Default data type#

MegEngine defines the default data types of a Tensor as follows:

- The default floating-point data type is float32;
- The default integer data type is int32;
- The default index data type is int32.

dtype is used as a parameter#

Both Tensor initialization and the functions that create a Tensor accept a dtype parameter to specify the data type:

>>> megengine.Tensor([1, 2, 3], dtype="float32")

Tensor([1. 2. 3.], device=xpux:0)

>>> megengine.functional.arange(5, dtype="float32")

Tensor([0. 1. 2. 3. 4.], device=xpux:0)

If a Tensor is created from existing data without specifying dtype, its data type is inferred according to the default data types:

>>> megengine.Tensor([1, 2, 3]).dtype

int32

Warning

If you create a MegEngine Tensor from a NumPy array of an unsupported type, unexpected behavior may occur. It is therefore best to specify the dtype parameter every time you perform such a conversion, or to convert the NumPy array to a supported data type first.

In addition, you can use the astype method to get the converted Tensor (the original Tensor remains unchanged):

>>> megengine.Tensor([1, 2, 3]).astype("float32")

Tensor([1. 2. 3.], device=xpux:0)

Type promotion rules#

Note

According to MEP 3 – Tensor API Design Specification, the type promotion rules should follow those of the “Array API Standard” <https://data-apis.org/array-api/latest/API_specification/type_promotion.html>:

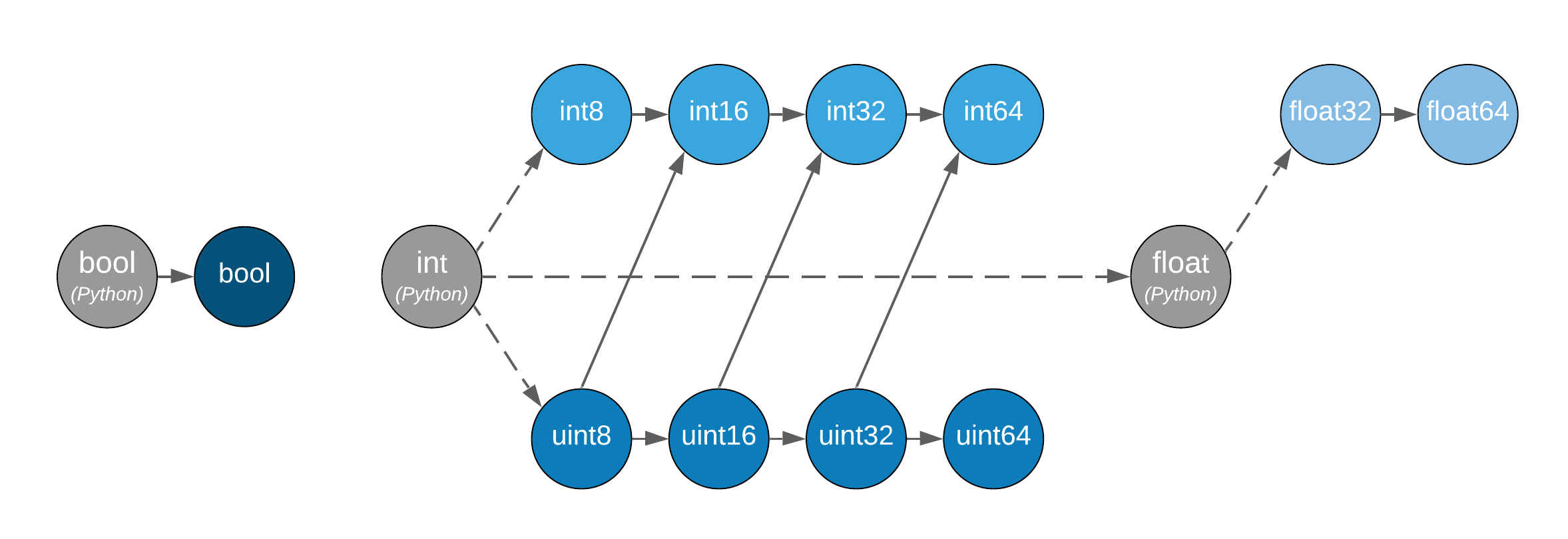

When Tensors or Python scalars of different data types take part in an operation as operands, the returned type is determined by the relationships shown in the figure above: follow the arrows upward until the operand types converge at the nearest common data type, which becomes the return type.

- The key to determining type promotion is the types of the data involved in the operation, not their values;
- The dotted lines in the figure indicate that the behavior of a Python scalar is undefined when it overflows;
- Boolean, integer, and floating-point dtypes are not connected to each other, indicating that mixed type promotion is undefined.

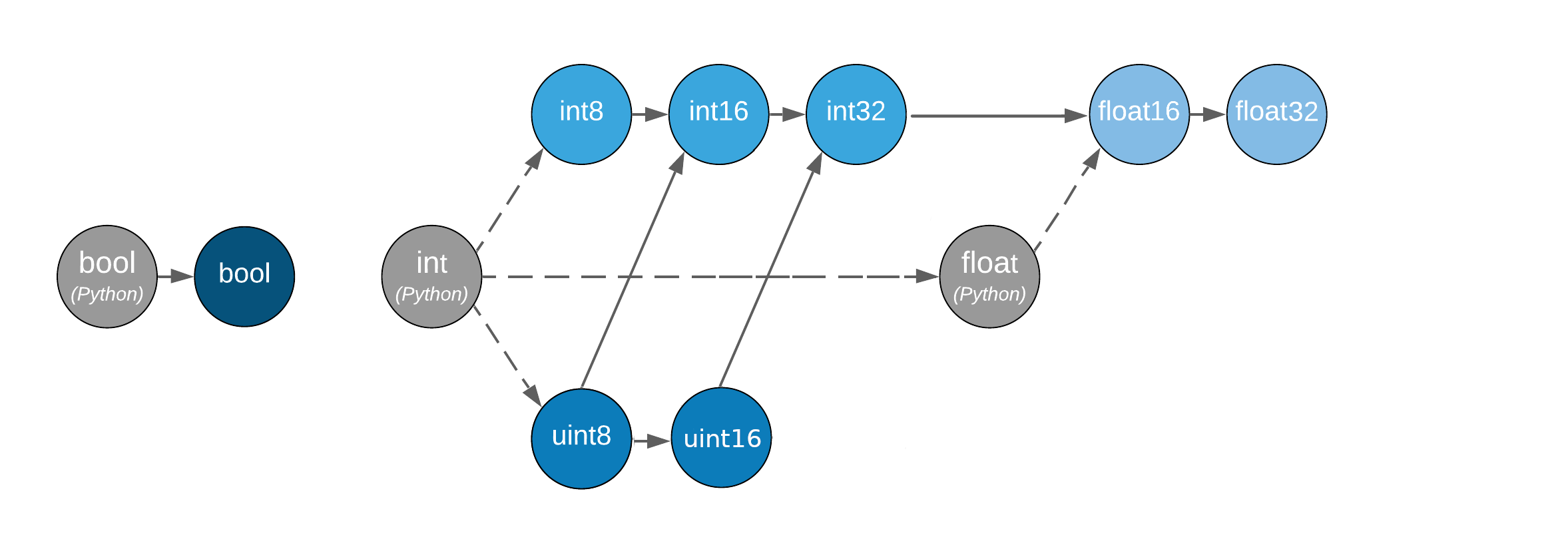

In MegEngine, since all types in the “standard” are not yet supported, the current promotion rules are shown in the following figure.:

Following the principle of type first, there is a mixed type promotion rule of bool -> int -> float;

When the Python scalar type is mixed with Tensor, it is converted to Tensor data type;

The Boolean type

dtypeis not connected to other types, indicating that the related mixed type promotion is undefined.

Note

The type promotion rules discussed here mainly apply to element-wise operations.

For example, an operation between a uint8 Tensor and an int8 Tensor returns an int16 Tensor:

>>> a = megengine.Tensor([1], dtype="int8") # int8 -> int16

>>> b = megengine.Tensor([1], dtype="uint8") # uint8 -> int16

>>> (a + b).dtype

numpy.int16

An operation between an int16 Tensor and a float32 Tensor returns a float32 Tensor:

>>> a = megengine.Tensor([1], dtype="int16") # int16 -> int32 -> float16 -> float32

>>> b = megengine.Tensor([1], dtype="float32")

>>> (a + b).dtype

numpy.float32

When a Python scalar is mixed with a Tensor and both are of the same kind (for example, a Python int and an integer Tensor), the Python scalar is converted to the Tensor's data type:

>>> a = megengine.Tensor([1], dtype="int16")

>>> b = 1 # int -> a.dtype: int16

>>> (a + b).dtype

numpy.int16

Note that if the Python scalar is a float while the Tensor has an integer type, type promotion takes place:

>>> a = megengine.Tensor([1], dtype="int16")

>>> b = 1.0 # Python float -> float32

>>> (a + b).dtype

numpy.float32

In this case, the Python scalar is converted to a float32 Tensor according to the default data type.
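The four results in this section can be reproduced with a toy model that treats promotion as finding the nearest common type in a directed graph. This is only a sketch under stated assumptions, not MegEngine's implementation: the `EDGES` table is hypothetical, with the unsigned-to-signed edges taken from the “Array API Standard” and the `int32 -> float16 -> float32` edges inferred from the comment in the example above, and the names `promote` and `promote_with_scalar` are made up for illustration. Boolean types are left out.

```python
# Hypothetical promotion edges (assumption): each dtype points to the
# next wider dtype(s) it may be promoted to.
EDGES = {
    "int8": ["int16"],
    "uint8": ["uint16", "int16"],
    "int16": ["int32"],
    "uint16": ["int32"],
    "int32": ["float16"],
    "float16": ["float32"],
    "float32": [],
}
# Insertion order of EDGES is a topological order: narrower types come first.
ORDER = list(EDGES)


def reachable(dtype: str) -> set[str]:
    """Return `dtype` plus every dtype it can be promoted to."""
    seen = {dtype}
    stack = [dtype]
    while stack:
        for nxt in EDGES[stack.pop()]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def promote(a: str, b: str) -> str:
    """Return the nearest dtype that both `a` and `b` can be promoted to."""
    common = reachable(a) & reachable(b)
    return next(d for d in ORDER if d in common)


def promote_with_scalar(tensor_dtype: str, scalar) -> str:
    """Model mixing a Tensor with a Python int or float scalar."""
    if isinstance(scalar, float) and not tensor_dtype.startswith("float"):
        return "float32"  # default floating-point data type
    return tensor_dtype   # scalar adopts the Tensor's data type


print(promote("int8", "uint8"))            # int16
print(promote("int16", "float32"))         # float32
print(promote_with_scalar("int16", 1))     # int16
print(promote_with_scalar("int16", 1.0))   # float32
```

Because the figure itself is the authoritative description, any combination not shown in the examples above should be checked against MegEngine directly rather than against this model.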